
AI technology and policy

ChatGPT has taken the world by storm in the last few months. It has been covered extensively in the media, has become a topic of family dinner discussions, and has sparked new debates on the good and bad of AI. Questions that have been asked before now seem more pressing.

Will AI replace us? Are our societies ready to embrace the good that AI, ChatGPT included, has to offer while minimising the bad? Should we pause AI development, as Elon Musk, Yuval Harari, and others have called for in an open letter? Who should answer the governance and policy questions that AI raises? Should it be the UN, the US Congress, the European Parliament, or…?

As governments, international organisations, experts, businesses, users, and others explore these and similar questions, our coverage of AI technology and policy is meant to help you stay up to date with developments in this field, grasp their meaning, and separate hype from reality.

News / updates

The European Consumer Organisation (BEUC) calls for an investigation into ChatGPT technology

UK Government invests £100 million to build safe and innovative AI technology

Russia’s Sberbank releases its own AI chatbot, GigaChat

About AI and its policy implications

Artificial intelligence (AI) might sound like something from a science fiction movie in which robots are ready to take over the world. While such robots are purely fixtures of science fiction (at least for now), AI is already part of our daily lives, whether we know it or not.

As the name suggests, AI systems are embedded with some level of ‘intelligence’ that makes them capable of performing certain tasks or replicating specific behaviours that normally require human intelligence. What makes them ‘intelligent’ is a combination of data and algorithms.

The technology and its many applications certainly carry significant potential for good, but there are also risks. Accordingly, the policy implications of AI advancements are far-reaching. While AI can generate economic growth, there are growing concerns over the significant disruptions it could bring to the labour market. Issues related to privacy, safety, and security are also in focus. As innovation in the field continues, more and more stakeholders are calling for AI standards and AI governance frameworks to help ensure that AI applications have minimal unintended consequences.


Governing AI

One overarching question is whether AI-related challenges (especially regarding safety, privacy, and ethics) call for new legal and regulatory frameworks, or whether existing ones can be adapted to address them.

While adapting existing regulation was for some time seen as the most suitable approach, this has started to change over the past two to three years. Multiple governance initiatives are now under way at national, regional, and international levels.

US Blueprint for an AI Bill of Rights

Released by the White House Office of Science and Technology Policy in October 2022, the Blueprint for an AI Bill of Rights is a guide for a society that protects all people from the threats that automated systems can pose to their rights, and that uses technologies in ways that reinforce our highest values. Responding to the experiences of the American public, and informed by insights from researchers, technologists, advocates, journalists, and policymakers, this framework is accompanied by From Principles to Practice, a handbook for anyone seeking to incorporate these protections into policy and practice, including detailed steps toward putting these principles into effect in the technological design process.

China’s Regulation of Generative Artificial Intelligence

China’s draft Measures for the Management of Generative Artificial Intelligence Services, released for public comment by the Cyberspace Administration of China in April 2023, apply to the research, development, and use of generative AI products to provide services to the public within the territory of the People’s Republic of China.

The Measures define generative AI as technologies that generate text, images, sounds, videos, code, and other content based on algorithms, models, and rules.

EU's AI Act

Proposed by the European Commission in April 2021 and currently under negotiation among the EU institutions, the draft AI regulation introduces a risk-based regulatory approach for AI systems: if an AI system poses unacceptable risks, it is banned; if it comes with high risks (for instance, the use of AI in performing surgeries), it will be strictly regulated; and if it involves only limited risks, the focus is on ensuring transparency for end users.

UNESCO Recommendation on AI Ethics

Adopted by UNESCO’s 193 Member States in November 2021, the Recommendation on the Ethics of Artificial Intelligence is the first global standard-setting instrument on AI ethics. It sets out values and principles, such as respect for human rights and human dignity, transparency, and fairness, and outlines policy areas in which Member States are encouraged to put them into practice.

OECD AI Principles

The OECD Recommendation on Artificial Intelligence, adopted in May 2019, sets out the OECD AI Principles: values-based principles for the responsible stewardship of trustworthy AI, together with recommendations for national policies and international cooperation. It was the first intergovernmental standard on AI, and the G20 AI Principles adopted in June 2019 draw on it.

Council of Europe work on a Convention on AI and human rights

In 2021, the Committee of Ministers of the Council of Europe (CoE) approved the creation of a Committee on Artificial Intelligence (CAI), tasked with elaborating a legal instrument on the development, design, and application of AI systems based on the CoE’s standards on human rights, democracy, and the rule of law, and conducive to innovation.

Group of Governmental Experts on Lethal Autonomous Weapons Systems

Within the UN system, the High Contracting Parties to the Convention on Certain Conventional Weapons (CCW) established a Group of Governmental Experts on Lethal Autonomous Weapons Systems (LAWS) to explore the technical, military, legal, and ethical implications of LAWS.

Global Partnership on Artificial Intelligence

In June 2020, Australia, Canada, France, Germany, India, Italy, Japan, Mexico, New Zealand, the Republic of Korea, Singapore, Slovenia, the UK, the USA, and the EU launched the Global Partnership on Artificial Intelligence (GPAI), an international and multistakeholder initiative dedicated to guiding ‘the responsible development and use of AI, grounded in human rights, inclusion, diversity, innovation, and economic growth’.

In depth: Africa and artificial intelligence

Africa is taking steps towards a faster uptake of AI, and AI-related investment and innovation are advancing across the continent. Governments are adopting national AI strategies, regional and continental organisations are exploring similar approaches, and there is increasing African participation in global governance processes focused on various aspects of AI.

National AI strategies and other governmental initiatives

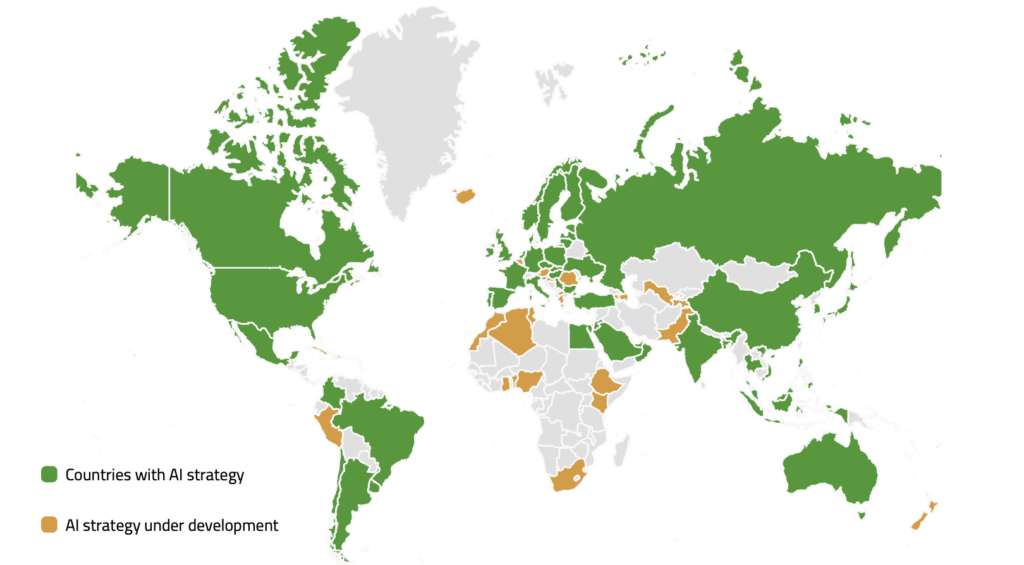

As AI technologies continue to evolve at a fast pace and find more and more applications in various areas, countries are increasingly aware that they need to keep up with this evolution and take advantage of it. Many are developing national AI strategies and addressing the economic, social, and ethical implications of AI advancements.

China, for example, released a national AI development plan in 2017, intended to make the country the world leader in AI by 2030 and to build a national AI industry worth $150 billion. The United Arab Emirates (UAE) also has an AI strategy, which aims to support the development of AI solutions for several vital sectors in the country, such as transportation, healthcare, space exploration, smart consumption, water, technology, education, and agriculture. The country has even appointed a State Minister for AI to work on ‘making the UAE the world’s best prepared [country] for AI and other advanced technologies’. In 2018, France and Germany were among the countries that followed this trend by launching national AI development plans.

These are only a few examples. There are many more countries working on such plans and strategies on an ongoing basis, as the map below shows.